Definition

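Retrieval-Augmented Generation (RAG) is an AI architecture that enhances large language models (LLMs) by supplying them with information retrieved from an external knowledge base at query time. The goal is to produce more accurate, up-to-date, and contextually relevant responses while reducing hallucinations.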

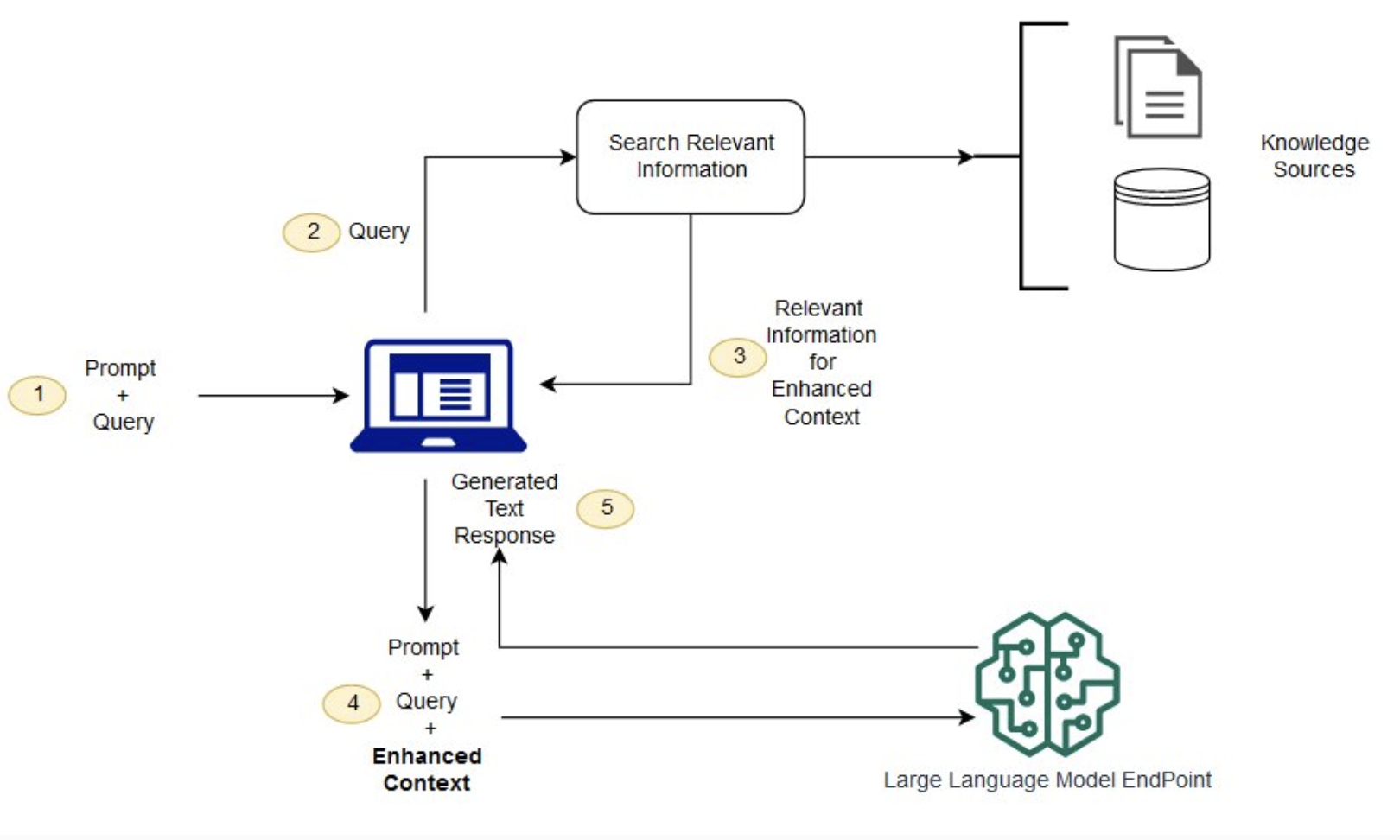

Stages:

- Retrieval Stage: The user submits a query, and RAG searches a knowledge base for relevant information.

- Augmentation Stage: The retrieved information is added to the user's original query as context.

- Generation Stage: The LLM generates a response grounded in the retrieved context, not just in its pre-trained knowledge.
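The three stages can be sketched as a small Python pipeline. This is a minimal illustration, assuming a tiny in-memory knowledge base and a naive word-overlap retriever standing in for a real dense or sparse retriever. The names `retrieve`, `augment`, and `rag_answer` are hypothetical, and `llm` stands for any function that sends a prompt to a model and returns its text; no specific LLM API is assumed.

```python
import re
from typing import Callable


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, knowledge_base: list[str], k: int = 2) -> list[str]:
    """Retrieval stage (hypothetical helper): rank documents by words shared with the query."""
    query_tokens = tokenize(query)
    scored = [(len(query_tokens & tokenize(doc)), doc) for doc in knowledge_base]
    # Drop documents with no overlap, then sort best-first (ties keep their original order).
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def augment(query: str, context: list[str]) -> str:
    """Augmentation stage: add the retrieved passages to the user's query as context."""
    context_block = "\n".join(f"- {passage}" for passage in context)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {query}"
    )


def rag_answer(query: str, knowledge_base: list[str], llm: Callable[[str], str]) -> str:
    """Generation stage: send the augmented prompt to the (assumed) LLM callable."""
    context = retrieve(query, knowledge_base)
    prompt = augment(query, context)
    return llm(prompt)


# Usage with a toy knowledge base.
knowledge_base = [
    "The Eiffel Tower is located in Paris.",
    "Python was created by Guido van Rossum.",
    "Paris is the capital of France.",
]


def echo_llm(prompt: str) -> str:
    """Stand-in for a real model call: returns the prompt so it can be inspected."""
    return prompt


print(rag_answer("Where is the Eiffel Tower?", knowledge_base, echo_llm))
```

The word-overlap scoring is deliberately crude (it even counts words like "the" and "is"). Because the stages are separate functions, `retrieve` could be swapped for a dense or sparse retriever without touching the augmentation and generation steps.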

Retrieval:

- Dense retrievers: Create dense vector embeddings of the text. They work best when the meaning of the text matters more than its exact wording, since the embeddings capture semantic similarity.

- Sparse retrievers: Use term-matching techniques such as TF-IDF. They excel at finding documents with exact keyword matches.
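Dense retrieval ranks documents by how close their embedding vectors are to the query's embedding, commonly measured with cosine similarity. The sketch below assumes the embeddings already exist: the three-dimensional vectors are made up for illustration (a real embedding model produces much longer vectors), and `dense_search` is a hypothetical helper, not a library API. The query shares no words with the matching document, which is the case where semantic similarity helps.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction, 0.0 = orthogonal)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # avoid division by zero for all-zero vectors
    return dot / (norm_a * norm_b)


def dense_search(query_vec: list[float], doc_vecs: list[list[float]], k: int = 1) -> list[int]:
    """Return the indices of the k documents whose embeddings are most similar to the query."""
    scores = [cosine_similarity(query_vec, vec) for vec in doc_vecs]
    ranked = sorted(range(len(doc_vecs)), key=lambda i: scores[i], reverse=True)
    return ranked[:k]


# Hypothetical embeddings; in practice an embedding model would produce these vectors.
docs = ["Resetting a forgotten password", "Quarterly sales report"]
doc_vecs = [[0.9, 0.1, 0.0], [0.0, 0.2, 0.9]]
query = "I can't log in to my account"  # no words in common with docs[0]
query_vec = [0.8, 0.2, 0.1]

best = dense_search(query_vec, doc_vecs)[0]
print(docs[best])  # Resetting a forgotten password
```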
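A minimal TF-IDF sparse retriever can be written with only the standard library. In this sketch each term in a document is weighted by its term frequency (count divided by document length) times log(N / df), where N is the number of documents and df is the number of documents containing the term; a document's score is the sum of the weights of the query terms it contains. This is one common TF-IDF variant (libraries differ in smoothing and normalization), and `build_index` and `sparse_search` are hypothetical names. The query uses a rare identifier, an error code, which is the kind of exact keyword match sparse retrieval handles well.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs: list[str]) -> list[dict[str, float]]:
    """Compute a TF-IDF weight for every term of every document."""
    tokenized = [tokenize(doc) for doc in docs]
    # Document frequency: the number of documents each term appears in.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    idf = {term: math.log(len(docs) / count) for term, count in df.items()}
    index = []
    for tokens in tokenized:
        counts = Counter(tokens)
        # Term frequency (count / document length) times inverse document frequency.
        index.append({term: (c / len(tokens)) * idf[term] for term, c in counts.items()})
    return index


def sparse_search(query: str, docs: list[str], k: int = 1) -> list[int]:
    """Score each document by summing the TF-IDF weights of the query terms it contains."""
    index = build_index(docs)
    query_terms = set(tokenize(query))
    scores = [sum(weights.get(term, 0.0) for term in query_terms) for weights in index]
    ranked = sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)
    return ranked[:k]


docs = [
    "Error code E1234 means the disk is full.",
    "Disk errors usually indicate hardware problems.",
    "Restart the service after updating the config.",
]
print(sparse_search("What does E1234 mean?", docs))  # [0]
```

Note that a term appearing in every document gets an IDF of log(1) = 0, so very common words contribute nothing to the score.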