Idea: Propagate the prediction backwards through the network to find out which neurons/inputs are the most relevant for the output (or simply one output class). The propagation goes from the output layer to the input layer. So Backwards. We select one output neuron (usually a class) and try to find the input tokens, that are responsible for that output.

We do not propagate the gradient but relevance. How relevance is calculated is explained here: Layerwise Relevance Propagation#Relevance Definition

j: neuron index further towards the input

k: neuron index further towards the output

Relevance Definition

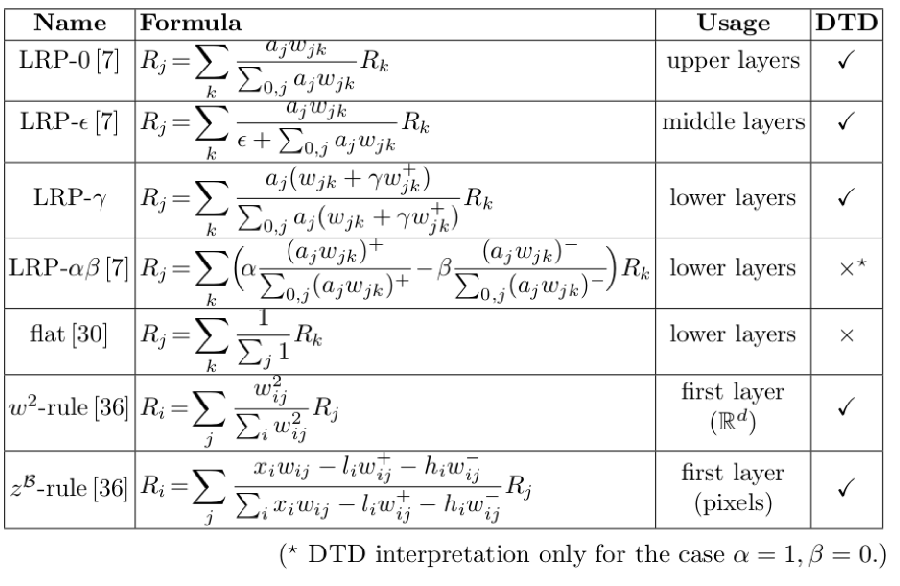

Relevance does not mean weight!! It is closely linked though. There are multiple possible Rules. These can be seen as "regularisations", mostly to reduce the noise/complexity of the produced explanations.

- LRP-0: Basic

= , simply going backwards through the NN. Gives an explanation equivalent to Gradient * Input. This is undesirable because gradients are very noisy - LRP-𝟄: Epsilon

Add a small termto the denominator. This aims to reduce the gradient noise by reducing the relevance of small contributions.

, notice the at the denominator. - LRP-𝞬: Gamma

Try to improve stability by limiting how large positive or negative relevances can become. Adds a multiplication factor to.

, Notice the little plus above . It signifies

negative contributions get punished the higherbecomes. Small contributions get punished as well.

The explanation itself can be a heatmap of the pixels relevant for the activation of one output Neuron (class).

How to choose the right rule

LRP rules are set differently at different layers.

This was mostly found out via heuristic methods, but the results are actually based on deep taylor decomposition. Taylor decomposition simplifies each layer into the sum of functions that approximate it.

Example: why use LRP-0 in the upper layer:

- Simplicity: These layers are very simple, and not subjected to the instability and interpretability issues of the lower layers. Therefore LRP-0 is sufficient.