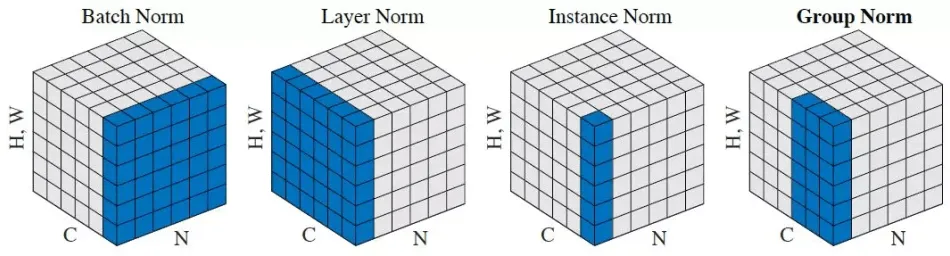

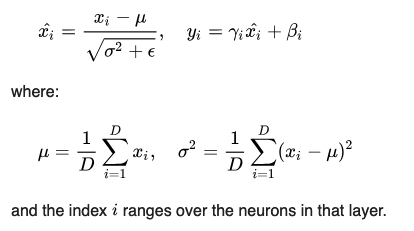

Layer normalization functions in a very similar way to BatchNorm. The difference is that we do not calculate the mean and std across the batch. Instead, we calculate them within each sample, and not per channel but over all values of the sample. It is as if the sample were flattened into a single vector and we normalized that vector.

N: samples

C: Channels

H, W: height, width
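The "flatten the sample and normalize it" picture can be made concrete with a minimal numpy sketch. It assumes a channels-first (N, C, H, W) input and leaves out the learnable scale and shift. The helper names `layer_norm` and `batch_norm` are hypothetical, not a library API. `batch_norm` is only there for contrast and uses batch statistics the way BatchNorm does during training.

```python
import numpy as np

EPS = 1e-5  # small constant for numerical stability (assumed value)

def layer_norm(x: np.ndarray) -> np.ndarray:
    """Hypothetical helper: normalize each sample over ALL of its values."""
    n = x.shape[0]
    flat = x.reshape(n, -1)                  # (N, C*H*W): one vector per sample
    mean = flat.mean(axis=1, keepdims=True)  # one mean per sample
    var = flat.var(axis=1, keepdims=True)    # one (biased) variance per sample
    return ((flat - mean) / np.sqrt(var + EPS)).reshape(x.shape)

def batch_norm(x: np.ndarray) -> np.ndarray:
    """Hypothetical helper, for contrast: one mean/variance per channel over N, H, W."""
    mean = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + EPS)

rng = np.random.default_rng(0)
x = rng.normal(loc=3.0, scale=2.0, size=(4, 3, 5, 5))  # (N, C, H, W)

y = layer_norm(x)
print(y.reshape(4, -1).mean(axis=1))  # approximately 0 for every sample
print(y.reshape(4, -1).std(axis=1))   # approximately 1 for every sample

# LayerNorm output for sample 0 does not depend on the other samples...
print(np.allclose(layer_norm(x[:1]), y[:1]))              # True
# ...whereas batch statistics change when the batch changes
print(np.allclose(batch_norm(x[:1]), batch_norm(x)[:1]))  # False
```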

How does it work?

The mean and std are computed within each sample, over all of its C × H × W values. They are not aggregated across the batch (N).
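Written out for a single sample with D = C·H·W values x_1, …, x_D, the computation looks roughly like this. The formula follows PyTorch's documented conventions: a biased variance, a small ε for numerical stability (default 1e-5), and a learnable per-element scale γ and shift β. `torch.nn.LayerNorm` adds γ and β by default (`elementwise_affine=True`).

$$
\mu = \frac{1}{D}\sum_{i=1}^{D} x_i, \qquad
\sigma^2 = \frac{1}{D}\sum_{i=1}^{D} (x_i - \mu)^2, \qquad
y_i = \frac{x_i - \mu}{\sqrt{\sigma^2 + \epsilon}}\,\gamma_i + \beta_i
$$

Here μ and σ² are scalars shared by all D values of the sample. γ and β have the same shape as the normalized dimensions.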

When to use

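Use Layer Normalization when normalizing across features (as opposed to normalizing each feature individually) makes sense. That is the case when it is meaningful to compare feature 1 with feature 2, for example because they describe the same kind of thing. Because the statistics never depend on the other samples in the batch, it also works well for small batches, where batch statistics become unreliable.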

However, this may not make sense, for example when the features do not describe the same type of data. In that case I would recommend using Instance Normalization, which normalizes each channel of each sample separately.
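The following numpy sketch shows why this matters. It assumes a hypothetical sample whose two channels live on very different scales. `normalize` is an illustrative helper, not a library function, and it ignores learnable parameters. LayerNorm shares one mean/std across both channels, so the small-scale channel is squashed almost to a constant. Instance Normalization (statistics per channel, per sample) rescales each channel on its own.

```python
import numpy as np

EPS = 1e-5  # small constant for numerical stability (assumed value)

def normalize(x: np.ndarray, axes: tuple) -> np.ndarray:
    """Hypothetical helper: zero mean / unit variance over the given axes."""
    mean = x.mean(axis=axes, keepdims=True)
    var = x.var(axis=axes, keepdims=True)
    return (x - mean) / np.sqrt(var + EPS)

rng = np.random.default_rng(1)
# One sample, two channels on very different scales: shape (1, 2, 4, 4)
x = np.stack([
    rng.normal(0.0, 1.0, size=(4, 4)),     # channel 0: values around 0, spread 1
    rng.normal(100.0, 10.0, size=(4, 4)),  # channel 1: values around 100, spread 10
])[None]

layer = normalize(x, axes=(1, 2, 3))  # LayerNorm: one mean/std for the whole sample
instance = normalize(x, axes=(2, 3))  # InstanceNorm: one mean/std per channel

print(layer[0].std(axis=(1, 2)))     # channel 0 is squashed to a tiny spread
print(instance[0].std(axis=(1, 2)))  # both channels have a spread of about 1
```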

Implementation

`torch.nn.LayerNorm(normalized_shape)`

`normalized_shape` specifies the trailing dimensions of the input tensor to normalize over. Its purpose is to tell the layer what shape a single data point has. The statistics are then computed within each data point and never across multiple data points. As a result, the output does not depend on the batch size, which is why the layer also works with small batches.
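The following sanity check assumes PyTorch is installed. With its default initialization (weight = 1, bias = 0), `nn.LayerNorm` over the trailing (C, H, W) dimensions should match a manual per-sample normalization. A sample should also come out the same whether or not the rest of the batch is present.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
x = torch.randn(2, 3, 4, 4)  # (N, C, H, W)

ln = nn.LayerNorm(normalized_shape=x.shape[1:])  # normalize over (C, H, W)

# Manual computation over the same trailing dims, one mean/variance per sample
dims = (1, 2, 3)
mean = x.mean(dim=dims, keepdim=True)
var = x.var(dim=dims, unbiased=False, keepdim=True)  # biased, as LayerNorm uses
manual = (x - mean) / torch.sqrt(var + ln.eps)

with torch.no_grad():
    out = ln(x)
    single = ln(x[:1])  # the first sample on its own, as a batch of size 1

print(torch.allclose(out, manual, atol=1e-5))     # True
print(torch.allclose(single, out[:1], atol=1e-6)) # True
```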

How to set the normalized shape:

- input shape: (batch_size, features) -> normalized shape: (features,)

- input shape: (batch_size, channels, height, width) -> normalized shape: (channels, height, width)
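The two cases above look like this in code, as a minimal sketch that assumes channels-first image tensors. The learnable weight (and bias) take exactly the shape `normalized_shape`. A shape that does not match the trailing dimensions of the input is rejected with an error.

```python
import torch
import torch.nn as nn

# Case 1: (batch_size, features) -> normalized_shape = (features,)
x1 = torch.randn(8, 16)
ln1 = nn.LayerNorm(16)  # a single int is treated as (16,)
y1 = ln1(x1)

# Case 2: (batch_size, channels, height, width) -> (channels, height, width)
x2 = torch.randn(8, 3, 32, 32)
ln2 = nn.LayerNorm([3, 32, 32])
y2 = ln2(x2)

print(ln1.weight.shape)  # torch.Size([16])
print(ln2.weight.shape)  # torch.Size([3, 32, 32])

# normalized_shape must match the LAST dimensions of the input, in order
try:
    nn.LayerNorm([32, 32, 3])(x2)
except RuntimeError as err:
    print("shape mismatch:", err)
```

Passing only the last dimension, e.g. `nn.LayerNorm(32)` on `x2`, would also run. However, it would normalize each row of W values separately instead of the whole sample. In general, `x.shape[1:]` gives the per-sample shape when the batch dimension comes first.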