Both approaches are not mutually exclusive

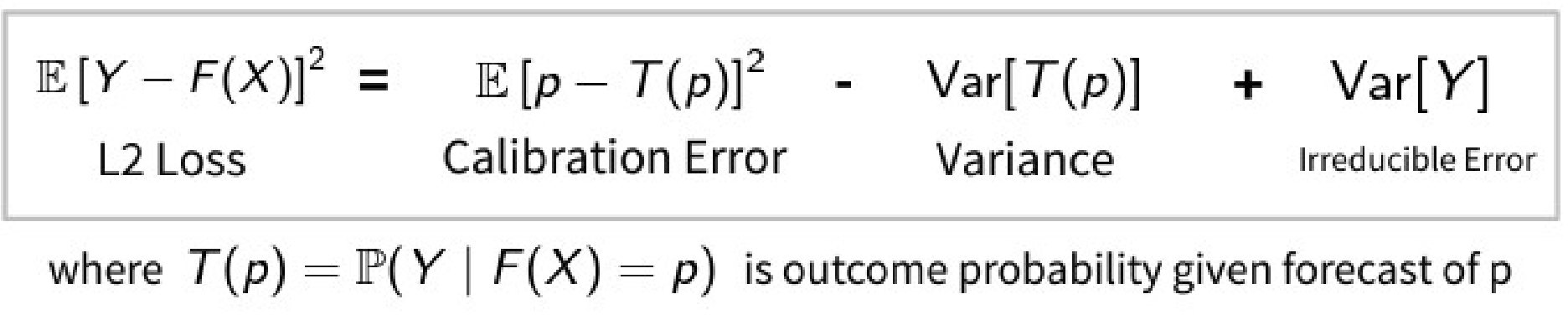

Via the Loss function

A loss function will try to:

- Minimize Calibration error

- Maximize sharpness

Via a post processing step.

Another way to fix calibration errors, is in a post processing step. Find some function, that maps the predictions onto more calibrated predictions. Computationally less expensive than to constantly tweak the loss function.

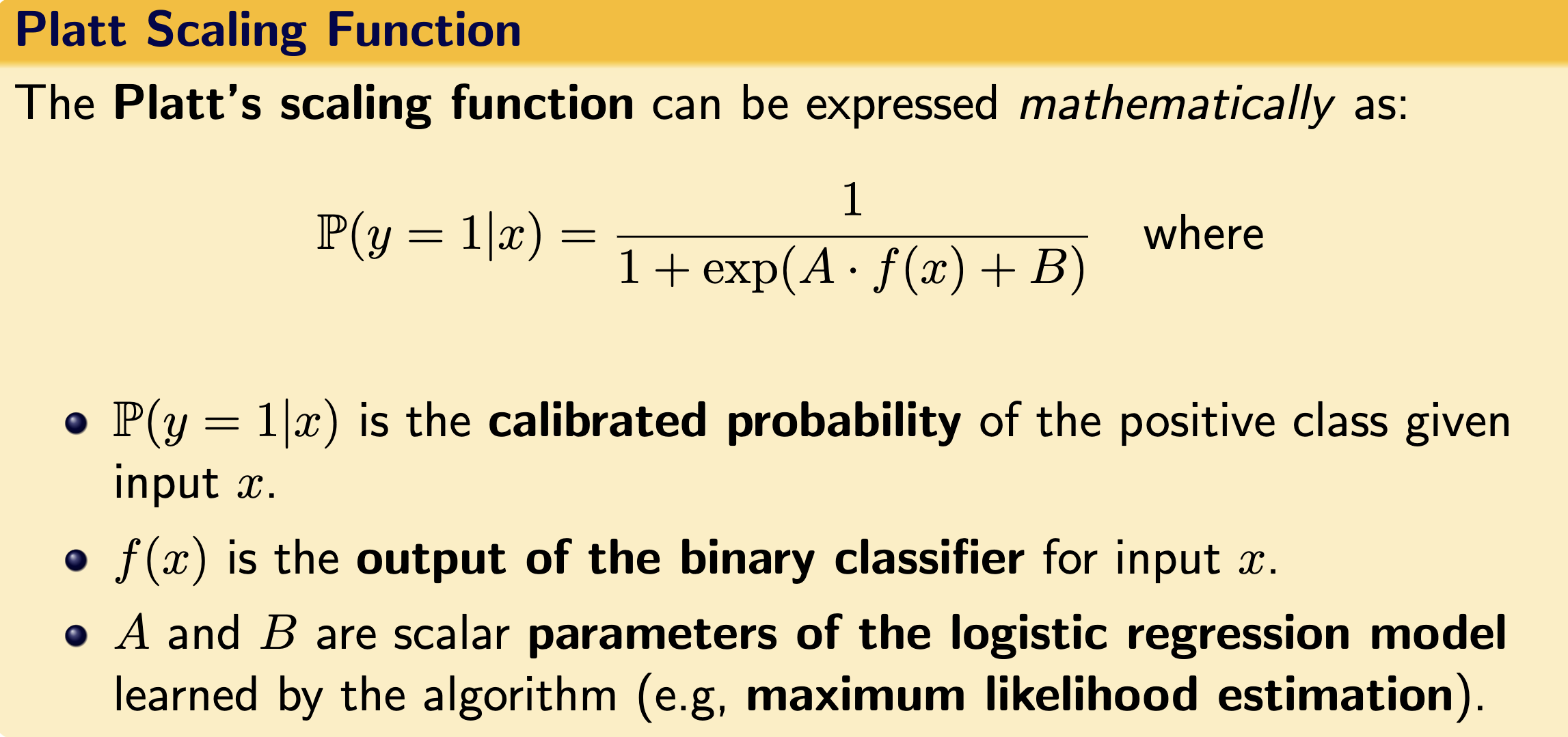

Example: Platt scaling method:

apply sigmoidal transformation to the models output whose parameters are learnt during maximum likelihood estimation.