Please explain what a confusion matrix is.

?

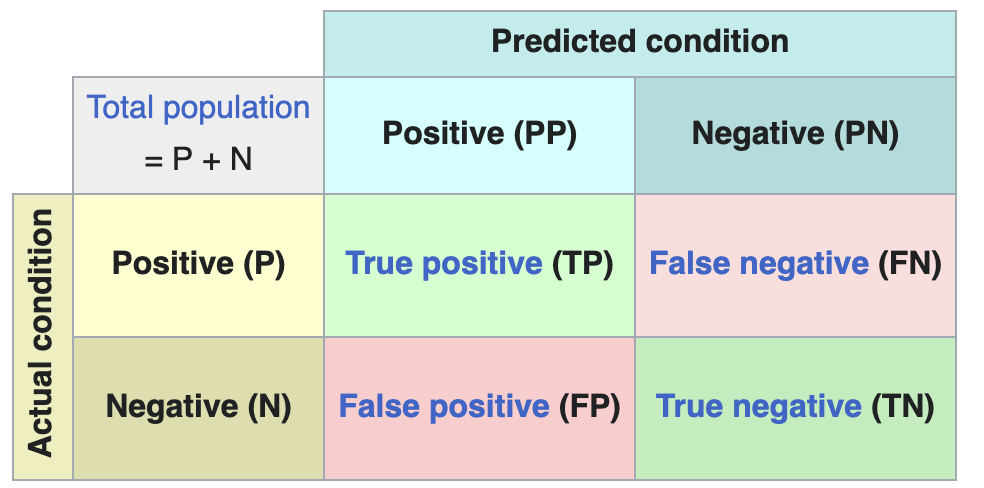

Confusion matrix

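The confusion matrix is a table that compares a classification model's predictions with the actual labels. For a binary classifier it has four cells: true positives (TP), false positives (FP), false negatives (FN) and true negatives (TN). Metrics such as accuracy, precision, recall and F1 are all computed from these counts.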

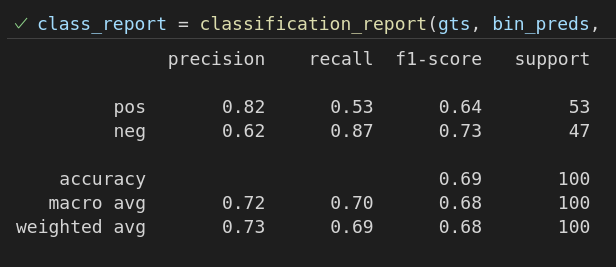
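To make the four cells concrete, here is a minimal plain-Python sketch that counts them from a list of true and predicted labels. It assumes binary labels with 1 = positive and 0 = negative. The function names and the sample labels are hypothetical. The 2×2 layout (rows = actual class, columns = predicted class) matches what scikit-learn's `confusion_matrix` returns for labels `[0, 1]`.

```python
from collections import Counter

def confusion_counts(y_true, y_pred):
    """Count TP, FP, FN, TN for binary labels (1 = positive, 0 = negative)."""
    pairs = Counter(zip(y_true, y_pred))  # missing pairs count as 0
    return {
        "TP": pairs[(1, 1)],  # actual positive, predicted positive
        "FP": pairs[(0, 1)],  # actual negative, predicted positive
        "FN": pairs[(1, 0)],  # actual positive, predicted negative
        "TN": pairs[(0, 0)],  # actual negative, predicted negative
    }

def confusion_matrix_2x2(y_true, y_pred):
    """Return [[TN, FP], [FN, TP]]: rows = actual class 0/1, columns = predicted class 0/1."""
    c = confusion_counts(y_true, y_pred)
    return [[c["TN"], c["FP"]], [c["FN"], c["TP"]]]

# Hypothetical example labels
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 1, 0, 1, 1, 0, 1, 0]
print(confusion_counts(y_true, y_pred))      # {'TP': 3, 'FP': 2, 'FN': 1, 'TN': 2}
print(confusion_matrix_2x2(y_true, y_pred))  # [[2, 2], [1, 3]]
```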

Implementation

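```python
import numpy as np
from sklearn.metrics import classification_report, confusion_matrix

# y_test: true 0/1 labels; y_pred: predicted probabilities for class 1
y_bin_preds = (np.asarray(y_pred) > 0.5).astype(int)  # threshold at 0.5

print("Confusion Matrix:\n", confusion_matrix(y_test, y_bin_preds))
# target_names follow the sorted labels 0, 1, 2, ... in that order (here 0 = "neg", 1 = "pos")
print(classification_report(y_test, y_bin_preds, target_names=["neg", "pos"]))
```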

Please provide the formulas for Accuracy, Precision and Recall. Please also explain when to use each.

?

Formulas

| Metric | Formula | Description | Pros / Cons / Use Case |
|---|---|---|---|
| Accuracy | $\frac{TP + TN}{TP + TN + FP + FN}$ | The proportion of all predictions that were correct. See the implementation example. | Simple, but misleading if the dataset is imbalanced: if 95% of samples are negative, always predicting "negative" already gives 95% accuracy. |
| Precision | $\frac{TP}{TP + FP}$ | The proportion of positive predictions that were actually positive. | Use when false positives are costly. Example: spam detection (a legitimate email flagged as spam is costly). |
| Recall | $\frac{TP}{TP + FN}$ | The proportion of actual positives that were correctly identified. | Use when false negatives are costly. Example: medical diagnosis (a missed illness is costly). |
| F1 Score | $2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$ | The harmonic mean of Precision and Recall, combining both in one metric. | Accounts for both FP and FN: if either Precision or Recall is low, the F1 score is low. Well suited to imbalanced datasets. |

If you care about more than the hard True/False predictions, use metrics that take the model's predicted probabilities (its confidence) into account, such as log loss. If such a metric is differentiable, it can be, and in practice is, used as a loss function for training.
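The metrics above can be computed directly from confusion-matrix counts. Below is a minimal plain-Python sketch; the function names and the example counts are hypothetical, and returning 0.0 when a denominator is zero is an assumed convention. `log_loss` (binary cross-entropy) is one example, chosen here, of the probability-based, differentiable metrics mentioned in the note.

```python
import math

def accuracy(tp, fp, fn, tn):
    # (TP + TN) / (TP + TN + FP + FN)
    return (tp + tn) / (tp + tn + fp + fn)

def precision(tp, fp):
    # TP / (TP + FP); undefined with no positive predictions, so return 0.0 (assumed convention)
    return tp / (tp + fp) if tp + fp else 0.0

def recall(tp, fn):
    # TP / (TP + FN); undefined with no actual positives, so return 0.0 (assumed convention)
    return tp / (tp + fn) if tp + fn else 0.0

def log_loss(y_true, p_pred, eps=1e-15):
    """Mean binary cross-entropy; p_pred holds predicted probabilities P(y = 1)."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1 - eps)  # clip so log() never sees 0
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(y_true)

# Hypothetical imbalanced test set: 95 negatives, 5 positives,
# and a model that always predicts "negative"
tp, fp, fn, tn = 0, 0, 5, 95
print(accuracy(tp, fp, fn, tn))           # 0.95: looks good
print(precision(tp, fp), recall(tp, fn))  # 0.0 0.0: no positive is ever found

# Same hard predictions at threshold 0.5, different confidence
y = [1, 0]
print(round(log_loss(y, [0.9, 0.1]), 3))  # 0.105: confident and correct
print(round(log_loss(y, [0.6, 0.4]), 3))  # 0.511: correct but hesitant, higher loss
```

Accuracy is 1.0 for both log-loss examples, so only the probability-based metric can tell the confident model from the hesitant one.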

Please provide the formula for the F1 score and when to use it.

?

Formulas

| Metric | Formula | Description | Pros / Cons / Use Case |
|---|---|---|---|
| F1 Score | $2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} = \frac{2\,TP}{2\,TP + FP + FN}$ | The harmonic mean of Precision and Recall, combining both in one metric. | Accounts for both FP and FN: if either Precision or Recall is low, the F1 score is low. Use it on imbalanced datasets, or when you need one number that balances false positives against false negatives. |
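A minimal plain-Python sketch of the F1 formula, in both its precision/recall form and its equivalent count form (function names and example values are hypothetical). It also shows why the harmonic mean is used: for a lopsided model with precision 1.0 and recall 0.1, the arithmetic mean would still be 0.55, while F1 stays low.

```python
def f1_from_pr(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are 0."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

def f1_from_counts(tp, fp, fn):
    """Equivalent count form: 2TP / (2TP + FP + FN)."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0

# Lopsided model: perfect precision, very low recall
p, r = 1.0, 0.1
print(round((p + r) / 2, 3))     # arithmetic mean: 0.55, hides the weak recall
print(round(f1_from_pr(p, r), 3))  # F1: 0.182, dragged down by the weak recall

# Both forms agree, e.g. TP = 3, FP = 2, FN = 1 (precision 0.6, recall 0.75)
print(round(f1_from_counts(3, 2, 1), 3), round(f1_from_pr(3 / 5, 3 / 4), 3))  # 0.667 0.667
```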