Bayesian Neural Networks return not only a predicted value but also a measure of uncertainty, such as an interval around that value, that expresses how certain the network is of its prediction.

Simple example:

Input: Size of the house:

Bayesian Neural Network output:

- Predicted house price: 300 000€
- Uncertainty: 20 000€
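To turn these two numbers into an interval, one common convention is to treat the prediction as the mean and the uncertainty as the standard deviation of a Gaussian predictive distribution. That interpretation is an assumption here, not something stated above. Under it, a minimal Python sketch using the standard library's `statistics.NormalDist` looks like this:

```python
from statistics import NormalDist

# Values from the house-price example. Treating the uncertainty as the
# standard deviation of a Gaussian predictive distribution is an assumption.
predicted_price = 300_000  # €
uncertainty = 20_000       # € (assumed to be one standard deviation)

predictive = NormalDist(mu=predicted_price, sigma=uncertainty)

# Central 95% interval: cut 2.5% of probability off each tail.
low = predictive.inv_cdf(0.025)
high = predictive.inv_cdf(0.975)
print(f"95% interval: {low:,.0f}€ to {high:,.0f}€")
```

This gives roughly 260 800€ to 339 200€, i.e. about ±1.96 standard deviations around the predicted price.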

How they work

Simplified

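Each weight and bias is a probability distribution (for example a Gaussian with a mean and a variance) instead of a single value. For each prediction, a value is sampled for every weight and bias term and a forward pass is run. Repeating this n times gives n predictions, from which a prediction distribution (e.g. its mean and spread) can be computed.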
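The sampling procedure above can be sketched in plain Python as follows. This is a minimal illustration, not a trained model: the network shape (1 input, 3 hidden units, 1 output), the `tanh` activation, the Gaussian form of every weight, and all the means and standard deviations are hypothetical values chosen for the example.

```python
import math
import random
import statistics

# Hypothetical learned distributions for a tiny 1-input, 3-hidden-unit network.
# Each weight/bias is a Gaussian given as (mean, standard deviation).
HIDDEN_W = [(0.8, 0.05), (-0.5, 0.10), (0.3, 0.02)]
HIDDEN_B = [(0.1, 0.01), (0.0, 0.05), (-0.2, 0.03)]
OUT_W = [(1.2, 0.10), (0.7, 0.05), (-0.4, 0.08)]
OUT_B = (0.05, 0.01)


def sample(param):
    """Draw one value from a Gaussian described by (mean, std)."""
    mean, std = param
    return random.gauss(mean, std)


def forward_once(x):
    """One forward pass, sampling a fresh value for every weight and bias."""
    hidden = [math.tanh(sample(w) * x + sample(b)) for w, b in zip(HIDDEN_W, HIDDEN_B)]
    return sum(sample(w) * h for w, h in zip(OUT_W, hidden)) + sample(OUT_B)


def predict(x, n=1000):
    """Repeat the sampled forward pass n times and summarise the predictions."""
    preds = [forward_once(x) for _ in range(n)]
    return statistics.mean(preds), statistics.stdev(preds)


random.seed(0)
mean, std = predict(1.5)
print(f"prediction: {mean:.3f} ± {std:.3f}")
```

The mean of the sampled predictions plays the role of the predicted value and the standard deviation plays the role of the uncertainty; increasing `n` makes both estimates more stable at the cost of more forward passes.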

In more detail:

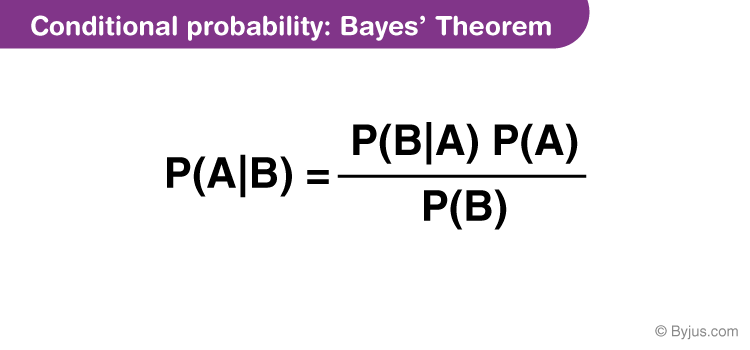

A Bayesian neural network can be seen as a directed acyclic graph in which each node represents a random variable in the Bayesian sense. Each node is a probability distribution that takes as input the values of its "parents", i.e. the preceding nodes connected to it. Given these parent values, Bayes' theorem is used to update the node's distribution, combining its prior with the observed evidence to obtain a posterior.
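To make the prior-to-posterior update concrete, here is a minimal sketch for a single weight `w` in a hypothetical one-weight model, price = `w` × size + Gaussian noise. With a Gaussian prior and Gaussian noise, Bayes' theorem has a closed-form answer. The data, the noise level and the prior are invented for illustration; a real Bayesian neural network has far too many weights for an exact update like this, which is why approximate inference is used in practice.

```python
import math


def posterior_weight(prior_mean, prior_std, xs, ys, noise_std):
    """Exact Bayesian update for one weight w in the model y = w * x + noise.

    Prior:      w ~ N(prior_mean, prior_std**2)
    Likelihood: y_i ~ N(w * x_i, noise_std**2), independent
    The Gaussian prior is conjugate to this likelihood, so the posterior is
    Gaussian too and Bayes' theorem reduces to adding precisions (1 / variance).
    """
    prior_precision = 1.0 / prior_std**2
    data_precision = sum(x * x for x in xs) / noise_std**2
    post_precision = prior_precision + data_precision
    post_mean = (prior_mean * prior_precision
                 + sum(x * y for x, y in zip(xs, ys)) / noise_std**2) / post_precision
    return post_mean, math.sqrt(1.0 / post_precision)


# Hypothetical data: house sizes in m² and prices in thousands of €.
sizes = [50, 80, 100, 120]
prices = [150, 245, 300, 355]

# Vague prior on the price per m² (k€), assumed noise of 20 k€ per house.
w_mean, w_std = posterior_weight(0.0, 10.0, sizes, prices, noise_std=20.0)
print(f"price per m²: {w_mean:.3f} ± {w_std:.3f} k€")
```

The posterior is about 2.99 ± 0.11 k€ per m², much narrower than the prior standard deviation of 10: the observed data has sharpened the distribution over the weight. With no data at all, the function simply returns the prior unchanged.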

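The parameters of these distributions can be learned during training with inference algorithms such as the expectation–maximization (EM) algorithm; for Bayesian neural networks, variational inference and Markov chain Monte Carlo (MCMC) methods are also widely used.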